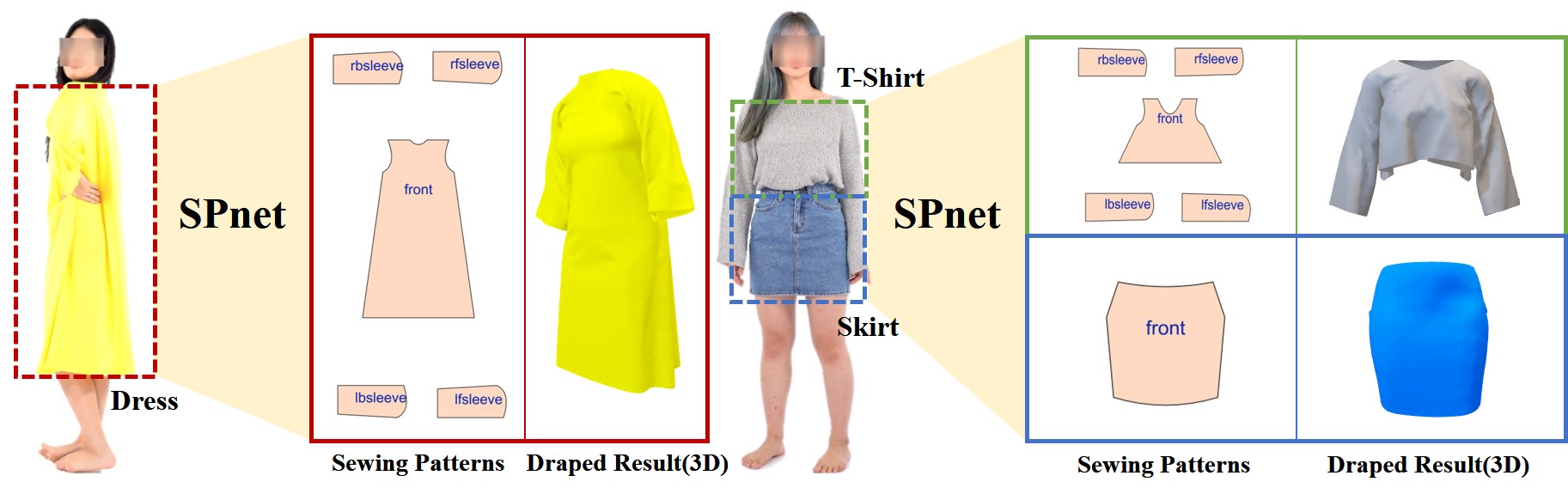

This paper presents a novel method for reconstructing 3D garment models from a single image of a posed user. Previous studies have primarily focused on accurately reconstructing garment geometries to match the input garment image, which often results in unnatural-looking garments when they are deformed for new poses. To overcome this limitation, our work infers the fundamental shape of the garment through sewing patterns from a single image, rather than directly reconstructing 3D garments.

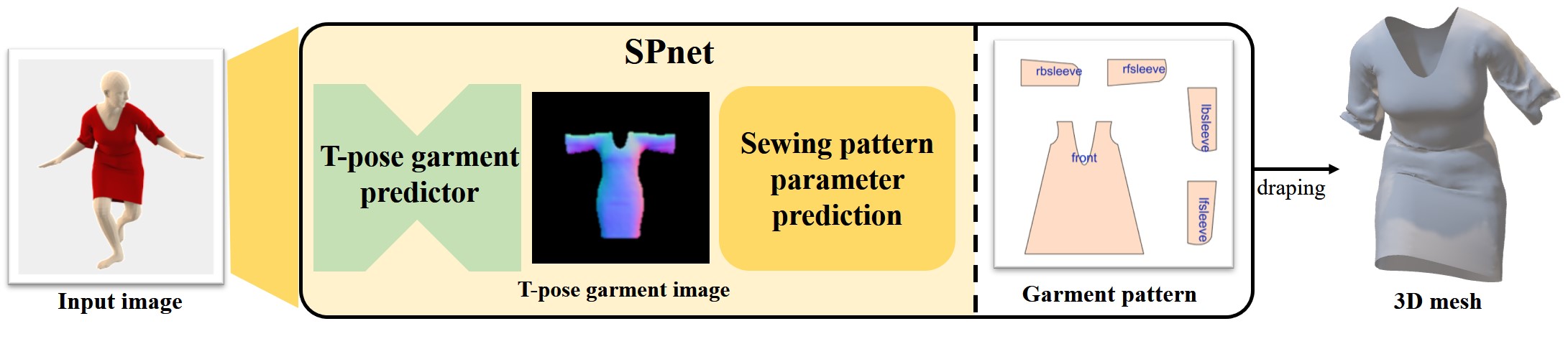

Our method consists of two stages. First, given a single image of a posed user, it predicts an image of the garment as worn in a T-pose, which represents the baseline form of the garment. Then, it estimates the sewing pattern parameters from the T-pose garment image. By stitching and draping the sewing pattern in a physics simulation, we can generate 3D garments that deform adaptively to arbitrary poses. The effectiveness of our method is validated through ablation studies on the major components and a comparison with other methods.

Our goal is to predict garment sewing patterns from a single near-front image of a person in an arbitrary pose. To address the challenge of predicting these patterns, we employ a two-step sequential approach: T-pose garment prediction followed by sewing pattern parameter prediction.
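The two-step structure can be illustrated with a minimal sketch. This is not the paper's implementation: the names `Image`, `SewingPatternParams`, and `predict_sewing_pattern`, as well as the two placeholder stages, are hypothetical and only show how the output of the first step feeds the second. In practice each stage would be a learned model.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for an image: a 2D grid of grayscale values.
Image = List[List[float]]


@dataclass
class SewingPatternParams:
    """Hypothetical container for a flat vector of pattern parameters."""
    values: List[float]


def predict_sewing_pattern(
    posed_image: Image,
    tpose_predictor: Callable[[Image], Image],
    pattern_estimator: Callable[[Image], SewingPatternParams],
) -> SewingPatternParams:
    """Run the two steps in sequence: posed image -> T-pose image -> parameters."""
    tpose_image = tpose_predictor(posed_image)   # step 1: T-pose garment prediction
    return pattern_estimator(tpose_image)        # step 2: pattern parameter prediction


# Placeholder stages, for illustration only (assumptions, not learned models).
def dummy_tpose_predictor(image: Image) -> Image:
    # Returns a copy of the input; a real stage would re-pose the garment.
    return [row[:] for row in image]


def dummy_pattern_estimator(image: Image) -> SewingPatternParams:
    # Summarizes the image as (height, width, mean intensity).
    height, width = len(image), len(image[0])
    mean = sum(sum(row) for row in image) / (height * width)
    return SewingPatternParams([float(height), float(width), mean])


params = predict_sewing_pattern(
    [[0.0, 1.0], [1.0, 1.0]], dummy_tpose_predictor, dummy_pattern_estimator
)
```

Passing the stages in as callables keeps the sequential dependency explicit: the pattern estimator only ever sees the T-pose image, never the original posed input.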

Our method generates garment shapes that closely reflect the appearance of the clothing in the input image and capture the elements that make clothes look natural.